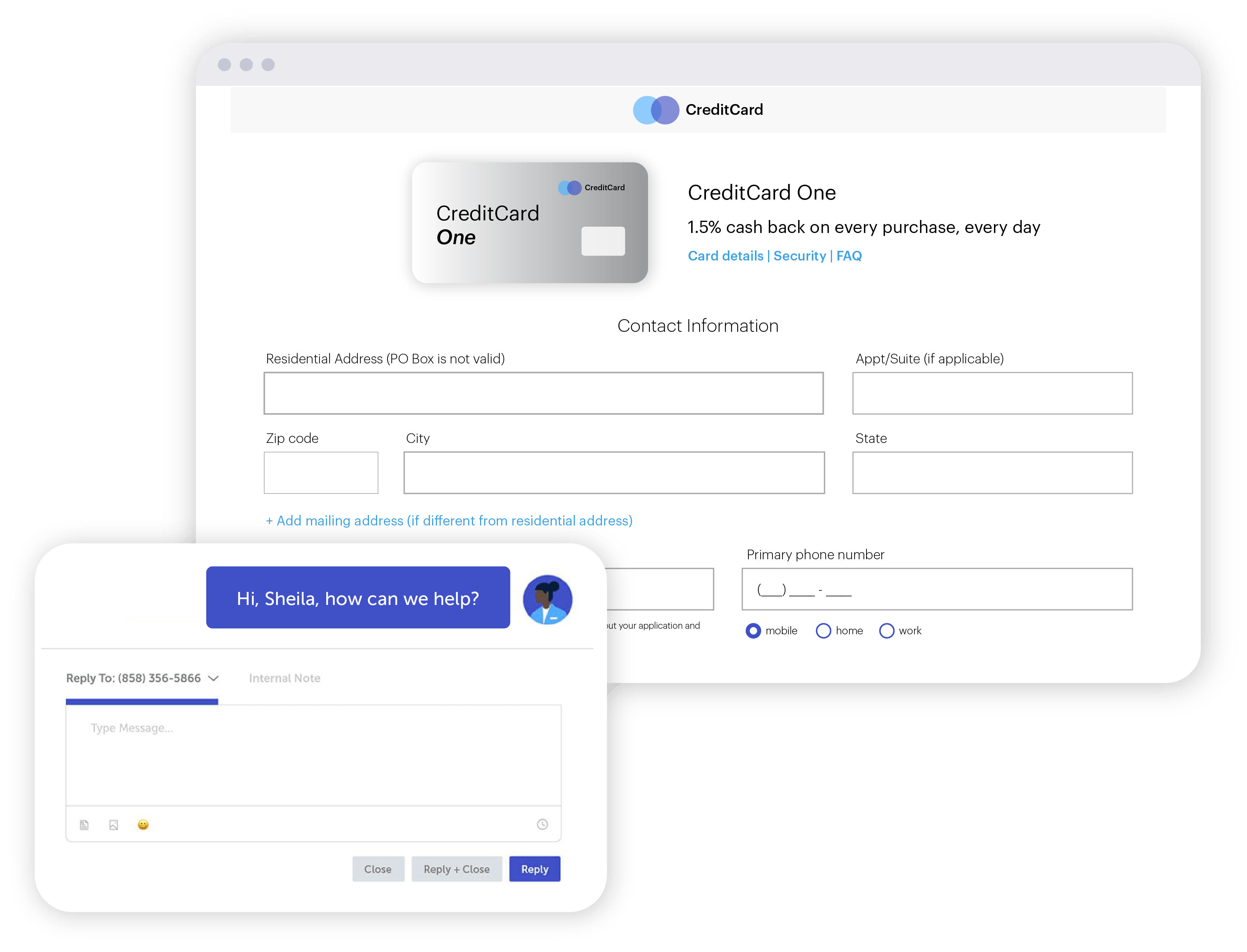

Delivering flawless digital experiences is essential to attracting and retaining online customers. But creating these experiences is tough when so many customers don’t provide any feedback on what was good or bad.

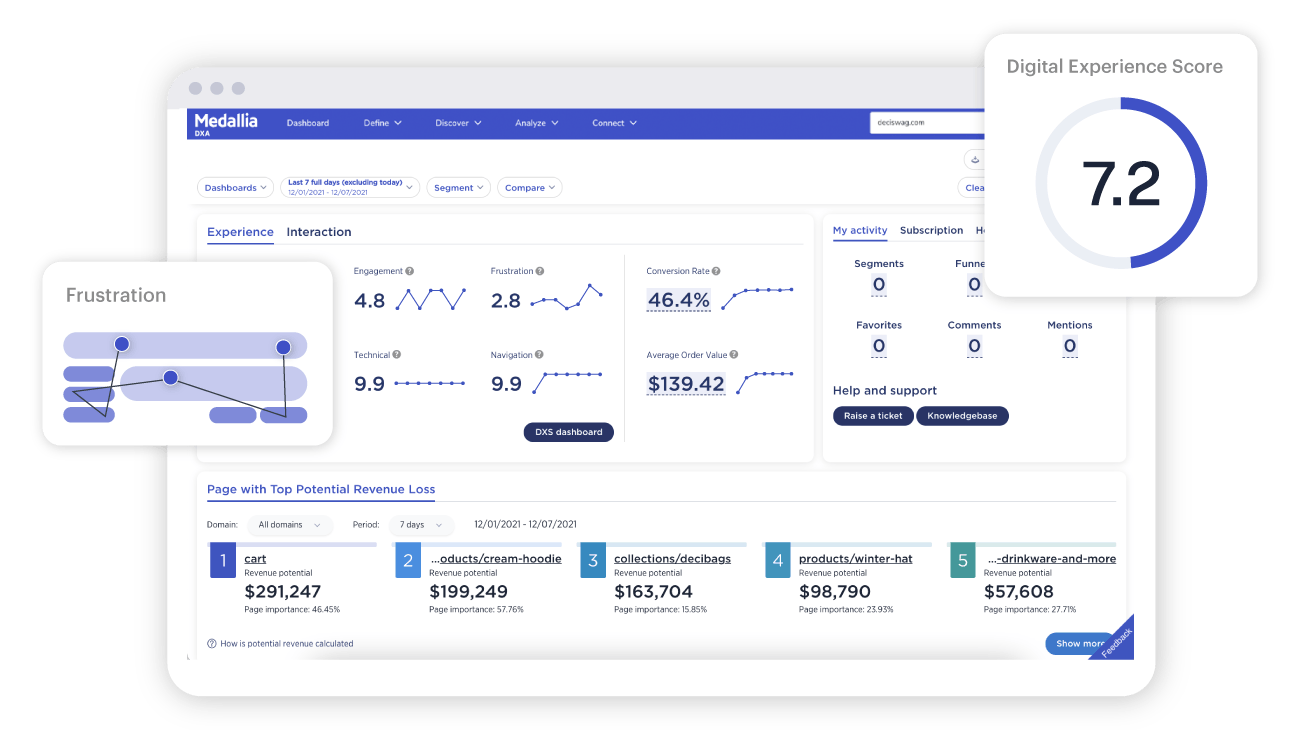

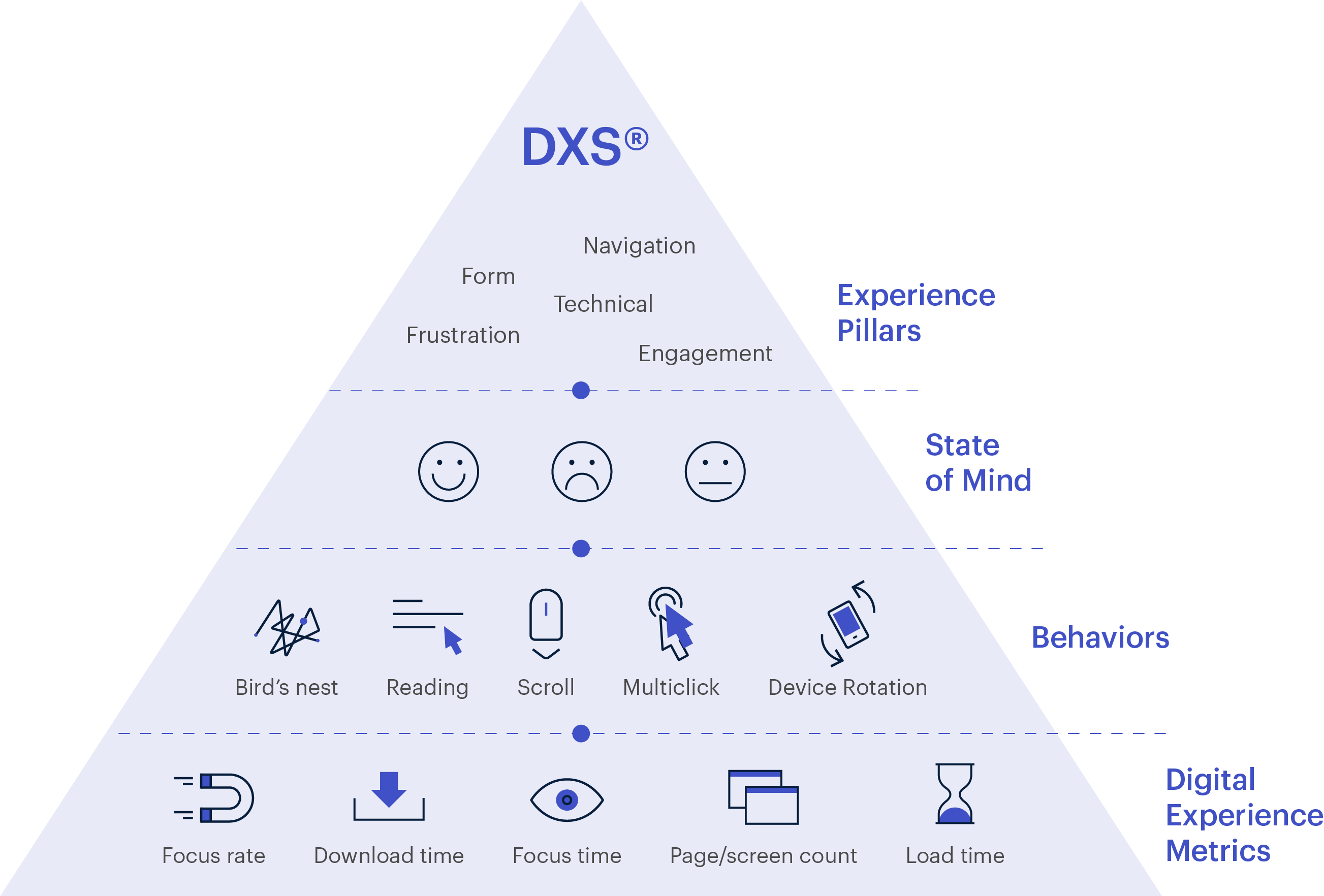

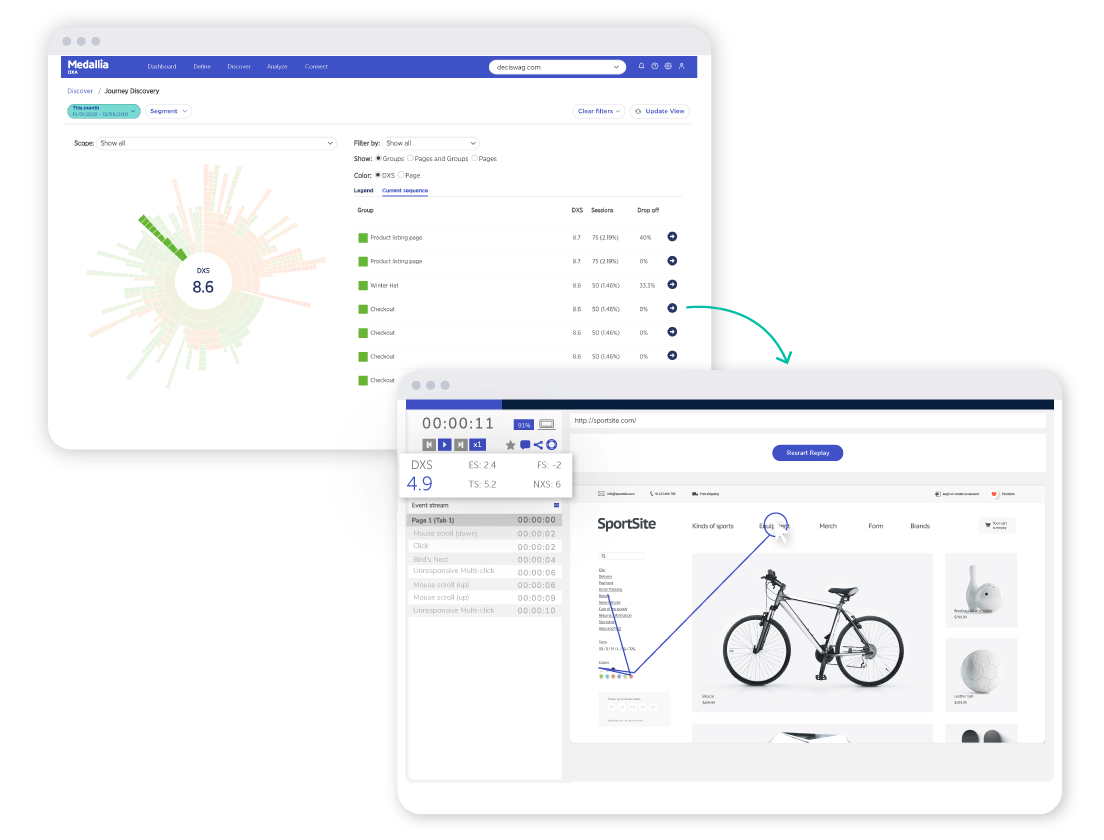

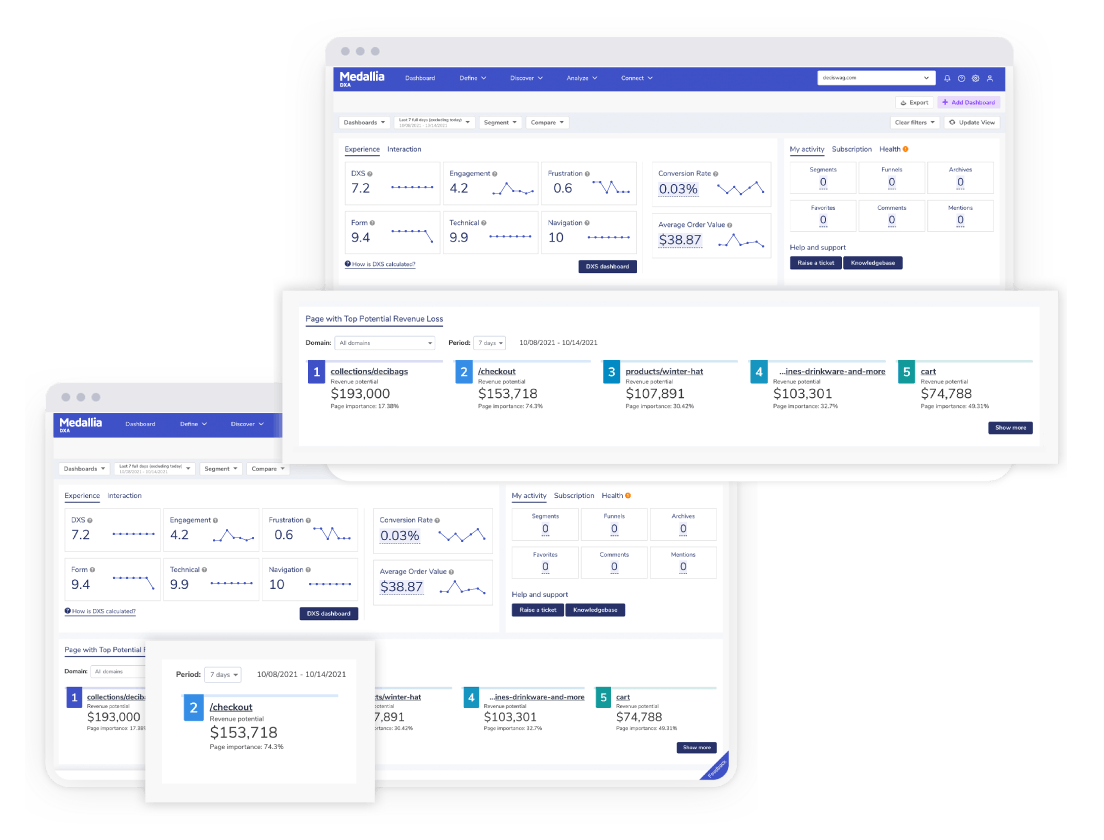

Get 100% visibility into every digital customer experience, and learn how to act on it, with Medallia DXA. By automatically scoring every experience, Medallia DXA instantly uncovers the biggest opportunities to improve your digital presence, strengthen customer relationships, and generate more revenue.